Generative AI capabilities are rapidly changing the customer service space. You can use Copilot in Dynamics 365 Customer Service today to help agents save time summarizing cases and conversations, because these features don’t depend on your organization’s support knowledge. However, before agents can use Copilot to answer questions and draft emails, you need to ensure Copilot is drawing on accurate knowledge content.

Good knowledge hygiene is key to bringing Copilot capabilities to life. For Copilot to successfully ingest, index, and surface the right knowledge asset, each asset must meet defined ingestion criteria. Preparing knowledge assets for Copilot ingestion is also not a one-time task: you need to keep ingested assets in sync with their upstream sources and apply proper curation and governance practices.

Every organization’s systems are unique, but we aim to provide a general set of best practices for creating and maintaining your Copilot corpus. We’ll cover four main topics:

- Defining the business case

- Establishing data quality and compliance standards

- Understanding the content lifecycle and integrating feedback

- Measuring success

Defining the business case

Look at your organization’s goals holistically to ensure they align with the content you intend to surface. Consult with different roles in each line of business to capture the types of content they already use or will need. Determine the purpose of each content element so its function and audience are clear. To see the greatest impact on productivity, focus on your organization’s common case management workflows that depend on knowledge.

You may want to take a phased approach to rolling out Copilot capabilities across your organization. Defining the use case for each line of business lets you create a comprehensive plan that’s easier to execute as you bring more agents on board. Administrators can create agent experience profiles to control which groups of agents can begin using Copilot and when.

For example, some lines of business may adhere more closely to your established content strategy. Consider deploying to these businesses first. Doing so gives you an opportunity to observe and account for variables in your businesses that aren’t visible today.

Establishing data quality and compliance standards

Identify the right combination of content measures and values before bringing content into your Copilot corpus. Careful preparation at this stage helps ensure Copilot surfaces the right content to your agents.

The following is a general list of must-haves for high-performing knowledge content:

- Intuitive title and description

- Separate sections with descriptive subheadings

- Plain language without technical jargon

- No knowledge asset attachments; convert them into individual knowledge assets

- No excessively long knowledge assets; break them into individual knowledge assets

- No broken or missing hyperlinks in the content body

- Descriptions for any images that appear in knowledge assets; Copilot cannot read text on images

- No customer data or personal information about employees, vendors, or other parties

- A review process for authoring, reviewing, and publishing articles

- A log of all actions related to ingesting, checking, and removing knowledge assets
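Several items in the list above can be checked automatically before an asset is ingested. The sketch below is a hypothetical pre-ingestion checker, not a Copilot or Dataverse feature: it assumes each asset is available as a title, a description, an HTML body, and a list of attachment names, and it uses an illustrative word limit (`MAX_WORDS`) that you would tune for your own corpus. It only flags links with no target; detecting truly broken links would also require requesting each URL.

```python
from dataclasses import dataclass, field
from html.parser import HTMLParser

# Hypothetical threshold for an "excessively long" asset; tune it for your corpus.
MAX_WORDS = 1500


class _BodyScanner(HTMLParser):
    """Counts visible words, images without alt text, and links without an href."""

    def __init__(self) -> None:
        super().__init__()
        self.words = 0
        self.images_missing_alt = 0
        self.links_missing_href = 0

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)  # attribute values may be None, e.g. <img alt>
        if tag == "img" and not (attributes.get("alt") or "").strip():
            self.images_missing_alt += 1
        if tag == "a" and not (attributes.get("href") or "").strip():
            self.links_missing_href += 1

    def handle_data(self, data):
        self.words += len(data.split())


@dataclass
class KnowledgeAsset:
    # Hypothetical shape of an exported asset; map it from your own source system.
    title: str
    description: str
    body_html: str
    attachments: list[str] = field(default_factory=list)


def check_asset(asset: KnowledgeAsset) -> list[str]:
    """Return the problems found; an empty list means the asset passes these checks."""
    problems = []
    if not asset.title.strip():
        problems.append("missing title")
    if not asset.description.strip():
        problems.append("missing description")
    if asset.attachments:
        problems.append("has attachments; convert them into separate assets")

    scanner = _BodyScanner()
    scanner.feed(asset.body_html)
    scanner.close()
    if scanner.words > MAX_WORDS:
        problems.append(f"too long ({scanner.words} words); split it")
    if scanner.images_missing_alt:
        problems.append(f"{scanner.images_missing_alt} image(s) without a description")
    if scanner.links_missing_href:
        problems.append(f"{scanner.links_missing_href} link(s) with no target")
    return problems
```

Checks like these can run as a gate in your publishing workflow, with the results written to the action log the list calls for. Criteria such as plain language or the absence of personal information still need human review.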

If you store knowledge assets in Dataverse, make sure the assets you ingest are always the latest version and in Published status.
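The rule above amounts to a filter over article versions. The following is a minimal sketch that assumes you have exported version records with a shared article identifier, an increasing version number, and a status string. These field names are hypothetical and are not the Dataverse schema. It reads the guidance literally: an article is kept only when its newest version is Published.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ArticleVersion:
    article_id: str  # hypothetical identifier shared by all versions of one article
    version: int     # hypothetical version number; higher means newer
    status: str      # for example "Draft" or "Published"


def ingestion_candidates(versions: list[ArticleVersion]) -> list[ArticleVersion]:
    """Keep an article only if its newest version is in Published status."""
    latest: dict[str, ArticleVersion] = {}
    for v in versions:
        current = latest.get(v.article_id)
        if current is None or v.version > current.version:
            latest[v.article_id] = v
    return [v for v in latest.values() if v.status == "Published"]
```

If your process keeps an older Published version live while a newer draft is in review, you might instead select the newest Published version of each article. That choice belongs to your governance policy rather than to this sketch.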

Understanding the content lifecycle and integrating feedback

As mentioned above, clearly defined processes for authoring, reviewing, publishing, synchronizing, curating, and governing knowledge assets will help ensure Copilot surfaces responses based on the most recent knowledge assets. Determine which roles in your organization will author knowledge assets and the review process they will use to ensure accuracy.

After publishing a knowledge asset, determine how your organization will gather feedback that signals when to update or deprecate it. Set an expiration date for each asset so you have a checkpoint for deciding whether to update or remove it.
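Expiration dates become useful checkpoints only if someone acts on them. This is a minimal sketch of a review queue, assuming you can export each asset’s identifier and expiration date. The record shape and the 30-day warning window are illustrative assumptions.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class AssetExpiry:
    asset_id: str     # hypothetical identifier
    expires_on: date  # expiration date set when the asset was published


def review_queue(assets: list[AssetExpiry], today: date,
                 warning_days: int = 30) -> tuple[list[str], list[str]]:
    """Return (expired, due_soon) asset IDs.

    An asset whose expiration date is today counts as expired; "due soon"
    means it expires within the next `warning_days` days.
    """
    horizon = today + timedelta(days=warning_days)
    expired = sorted(a.asset_id for a in assets if a.expires_on <= today)
    due_soon = sorted(a.asset_id for a in assets if today < a.expires_on <= horizon)
    return expired, due_soon
```

Routing the "due soon" list to each asset’s owner gives them time to update or retire the asset before it lapses.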

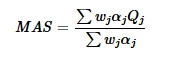

You can use the helpful response rate (HRR) to gather initial agent feedback. HRR is the number of positive (thumbs-up) ratings on Copilot responses divided by the total number of ratings (thumbs up plus thumbs down). You can correlate this feedback with the knowledge assets Copilot cites in its responses. To gather more detailed feedback, create a system that lets users request reviews, report issues, or suggest improvements.
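The HRR definition and the correlation step can be made concrete. The following is a sketch under stated assumptions: each rating record carries a thumbs-up/down flag and the IDs of the assets cited in the rated response (a hypothetical export format). Each rating is attributed to every asset cited in that response, which is a simplifying assumption. Unrated responses are excluded because they are not part of the HRR denominator.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rating:
    helpful: bool                  # True for thumbs up, False for thumbs down
    cited_assets: tuple[str, ...]  # hypothetical: IDs of assets cited in the rated response


def helpful_response_rate(ratings: list[Rating]) -> Optional[float]:
    """HRR = thumbs up / (thumbs up + thumbs down); None when there are no ratings."""
    if not ratings:
        return None
    return sum(r.helpful for r in ratings) / len(ratings)


def hrr_by_asset(ratings: list[Rating]) -> dict[str, float]:
    """Per-asset HRR, counting each rating toward every asset the response cited."""
    grouped: dict[str, list[Rating]] = defaultdict(list)
    for r in ratings:
        for asset_id in r.cited_assets:
            grouped[asset_id].append(r)
    return {asset_id: helpful_response_rate(rs) for asset_id, rs in grouped.items()}
```

Assets with a low per-asset HRR and a meaningful number of ratings are natural candidates for the detailed review process described above.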

Measuring success

Knowledge management is an ongoing process, and so is measuring it. Track usage and performance periodically to ensure Copilot is useful to agents and to identify areas for improvement.

Tracking analytics

First, measure the performance of your knowledge content against the purpose you defined for it at the outset. You can view some metrics directly in your Customer Service environment: go to Customer Service historical analytics and select the Copilot tab. The metrics and insights there provide a holistic view of the value that Copilot adds to your customer service operations.

You can also build your own Copilot interaction reports to see measurements such as the number of page views for each knowledge asset, the age of the asset, and whether the agent used the cited asset. Asset age is based on the date Copilot ingested the asset, so make sure your publication and ingestion cycles align.
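Because reported age follows ingestion rather than publication, an asset republished after its last ingestion can look fresh in a report while Copilot still cites the older copy. This minimal sketch assumes you can join each asset’s last publication date with its last ingestion date. The record shape and function names are hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class AssetDates:
    asset_id: str         # hypothetical identifier
    last_published: date  # when the current version was published in your knowledge base
    last_ingested: date   # when Copilot last ingested the asset


def reported_age_days(asset: AssetDates, today: date) -> int:
    """Age measured from the ingestion date, matching how asset age is described above."""
    return (today - asset.last_ingested).days


def out_of_sync(assets: list[AssetDates]) -> list[str]:
    """IDs of assets republished after their last ingestion (a possibly stale copy)."""
    return [a.asset_id for a in assets if a.last_published > a.last_ingested]
```

A non-empty `out_of_sync` result suggests the ingestion cycle is lagging behind the publication cycle.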

Serving business processes

Other key metrics are more closely tied to your organization’s business processes. Examples include:

- Number of cases related to a knowledge article

- Number of escalations prevented

- Time saved when agents access these articles

- Costs saved from reduced escalations and troubleshooting time
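The last two metrics in the list above are simple arithmetic once you have inputs you trust. This sketch shows one way to combine them. Every input, including the cost per escalation, the minutes saved per case, and the loaded hourly agent cost, is a hypothetical value you would measure or estimate yourself. The example does not supply any of these figures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SavingsInputs:
    escalations_prevented: int
    cost_per_escalation: float     # hypothetical: your fully loaded cost of one escalation
    minutes_saved_per_case: float  # hypothetical: measured or estimated per assisted case
    assisted_cases: int
    agent_cost_per_hour: float     # hypothetical: loaded hourly agent cost


def estimated_savings(x: SavingsInputs) -> dict[str, float]:
    """Combine escalation savings and time savings into a single estimate."""
    escalation_savings = x.escalations_prevented * x.cost_per_escalation
    hours_saved = x.minutes_saved_per_case * x.assisted_cases / 60
    time_savings = hours_saved * x.agent_cost_per_hour
    return {
        "hours_saved": hours_saved,
        "escalation_savings": escalation_savings,
        "time_savings": time_savings,
        "total": escalation_savings + time_savings,
    }
```

Keeping the inputs explicit makes it easy to revisit the estimate as your measurements of time saved and escalations prevented improve.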

Overall, introducing and expanding Copilot capabilities in your CRM is an iterative and ongoing process. Include stakeholders from every role to ensure your organization is using Copilot to help solve the right problems and enhance the agent experience.

AI solutions built responsibly

Enterprise-grade data privacy at its core. Azure OpenAI offers a range of privacy features, including data encryption and secure storage. It allows users to control access to their data and provides detailed auditing and monitoring capabilities. Because Copilot is built on Azure OpenAI, enterprises can rest assured that it offers the same level of data privacy and protection.

Responsible AI by design. We are committed to creating responsible AI by design. Our work is guided by a core set of principles: fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability. We are putting those principles into practice across the company to develop and deploy AI that will have a positive impact on society.

Learn more

For more information, read the documentation: Use Copilot to solve customer issues (Microsoft Learn).