You chose Dynamics 365 for your enterprise to improve visibility and to transform your business with insights. Is it a challenge to provide timely insights? Does it take too much effort to build and maintain complex data pipelines?

If your solution includes a data lake, you can now simplify your data pipelines and unlock the insights hidden in your data by connecting your Finance and Operations apps environment with the data lake. With the general availability of the Export to Data Lake feature in Finance and Operations apps, data from your Dynamics 365 environment is readily available in Azure Data Lake.

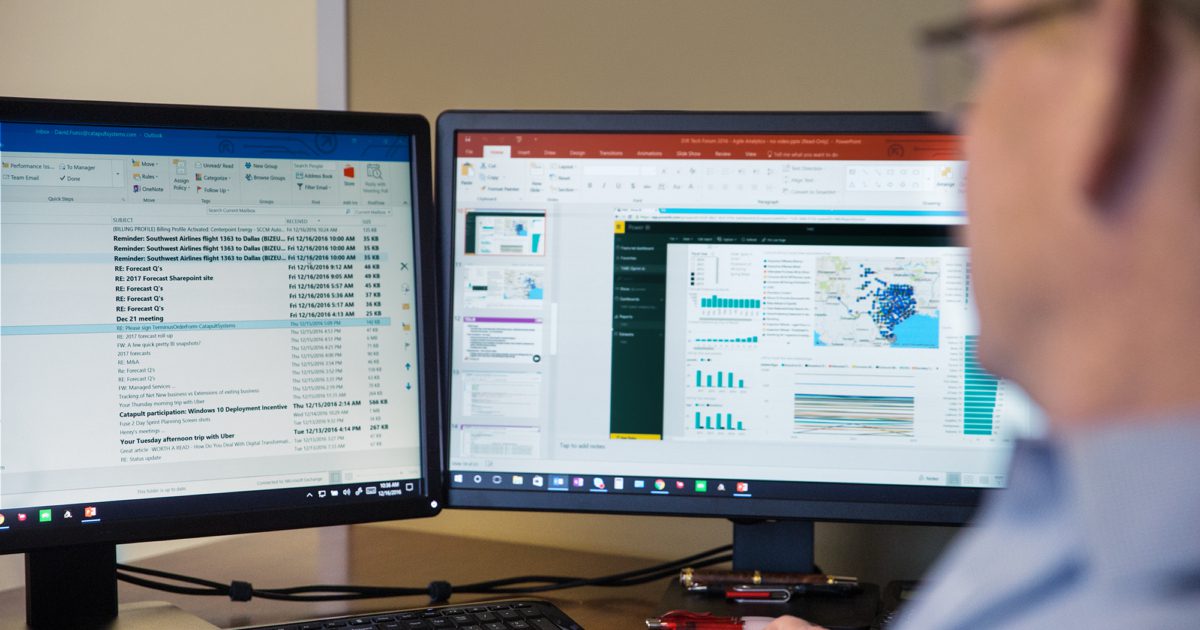

Data lakes are optimized for big data analytics. With a replica of your Dynamics 365 data in the data lake, you can use Microsoft Power BI to create rich operational reports and analytics. Your data engineers can use Spark and other big data technologies to reshape data or to apply machine learning models. Or you can work with a data lake the same way that you work with data in a SQL database. Serverless SQL pool endpoints in Azure Synapse Analytics let you query big data in the lake with Transact-SQL (T-SQL).

Why limit yourself to business data? You can ingest legacy data from previous systems, as well as data from machines and sensors, at a fraction of the cost incurred when storing data in a SQL data warehouse. You can easily mash up business data with signals from sensors and machines using Azure Synapse Analytics. For example, you can merge signals from the factory floor with production schedules, or merge web logs from e-commerce sites with invoices and inventory movements.

Can’t wait to try this feature? Here are the steps you need to follow.

Install the Export to Data Lake feature

The Export to Data Lake feature is an optional add-in included with your subscription to Dynamics 365. This feature is generally available in certain Azure regions: United States, Canada, United Kingdom, Europe, Southeast Asia, East Asia, Australia, India, and Japan. If your Finance and Operations apps environment is in any of those regions, you can enable this feature in your environment. If your environment isn’t in one of the listed regions, complete the survey and let us know. We will make this feature available in more regions in the future.

To begin to use this feature, your system administrator must first connect your Finance and Operations apps environment with an Azure Data Lake and provide consent to export and use the data.

To install the Export to Data Lake feature, first launch the Microsoft Dynamics Lifecycle Services portal and select the specific environment where you want to enable this feature. When you choose the Export to Data Lake add-in, you also need to provide the location of your data lake. If you have not created a data lake, you can create one in your Azure subscription by following the steps in Install Export to Azure Data Lake add-in.

Choose data to export to a data lake

After the add-in installation is complete, you and your power users can launch the environment for a Finance and Operations app and choose data to be exported to a data lake. You can choose from standard or customized tables and entities. When you choose an entity, the system chooses all the underlying tables that make up the entity, so there is no need to choose tables one by one.

Once you choose data, the system makes an initial copy of the data in the lake. If you chose a large table, the initial copy might take a few minutes. You can see the progress on screen. After the initial full copy is done, the system shows that the table is in a running state. From this point on, all changes that occur in the Finance and Operations apps are reflected in the lake.

That’s all there is to it. The system keeps the data fresh, and your users can consume data in the data lake. You can see the status of the exports, including the last refreshed time, on the screen.

Work with data in the lake

You’ll find that the data is organized into a rich folder structure within the data lake. Data is grouped by application area, then by module, and then by table type. This structure makes it easy to organize and secure your data in the lake.

Within each data folder are CSV files that contain the data. The files are updated in place as data in Finance and Operations apps is modified. In addition, the folders contain metadata that is structured based on the Common Data Model metadata system. This makes it easy for the data to be consumed by Azure Synapse, Power BI, and third-party tools.
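To make the pairing of CSV files and metadata concrete, here is a minimal Python sketch. It assumes the CSV files carry no header row, so column names and types have to come from the metadata. The metadata shape used here (a `name` plus a list of `attributes`) is a simplified, hypothetical stand-in and not the actual Common Data Model schema; the table and column names are illustrative only.

```python
import csv
import io
import json
from decimal import Decimal

# Hypothetical, simplified metadata. Real Common Data Model files are richer
# and use a different layout; this only illustrates the idea.
SAMPLE_METADATA = """
{
  "name": "CustTable",
  "attributes": [
    {"name": "ACCOUNTNUM", "dataType": "string"},
    {"name": "CREDITMAX", "dataType": "decimal"},
    {"name": "RECID", "dataType": "int64"}
  ]
}
"""

# Sample data rows, assumed to have no header line.
SAMPLE_CSV = "US-001,2500.50,5637144576\nUS-002,0,5637144577\n"

# Map the (assumed) metadata type names to Python converters.
CONVERTERS = {"string": str, "decimal": Decimal, "int64": int}


def read_table(csv_text, metadata_text):
    """Return one dict per CSV row, keyed by the column names in the metadata."""
    attributes = json.loads(metadata_text)["attributes"]
    rows = []
    for raw in csv.reader(io.StringIO(csv_text)):
        if len(raw) != len(attributes):
            raise ValueError(
                f"expected {len(attributes)} columns, found {len(raw)}"
            )
        row = {}
        for attribute, value in zip(attributes, raw):
            # Fall back to plain strings for types this sketch does not know.
            convert = CONVERTERS.get(attribute["dataType"], str)
            row[attribute["name"]] = convert(value)
        rows.append(row)
    return rows


customers = read_table(SAMPLE_CSV, SAMPLE_METADATA)
for customer in customers:
    print(customer["ACCOUNTNUM"], customer["CREDITMAX"])
```

In practice, tools such as Azure Synapse and Power BI read the metadata for you, so hand-written parsing like this is mainly useful for understanding what those tools do.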

If you want to use T-SQL to work with data in Azure Data Lake as if you were reading it from a SQL database, consider the CDMUtil tool, available from GitHub. This tool can create an Azure Synapse database, which you can then query using T-SQL, Spark, or Synapse pipelines.
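As an illustration of what querying the lake with T-SQL can look like, the following Python sketch builds a serverless SQL pool query that reads CSV files directly through `OPENROWSET`. It is not taken from CDMUtil and does not describe how that tool works. The storage URL, column names, and column types are hypothetical, and the sketch assumes the files have no header row, so the columns are declared in the `WITH` clause.

```python
def build_openrowset_query(storage_url, columns, top=10):
    """Build a T-SQL query that reads headerless CSV files via OPENROWSET.

    storage_url: location of the CSV files in the lake (hypothetical here).
    columns: list of (column_name, sql_type) pairs, in file order.
    """
    # Escape characters that would otherwise end the literal or identifier.
    safe_url = storage_url.replace("'", "''")
    column_list = ",\n    ".join(
        "[{}] {}".format(name.replace("]", "]]"), sql_type)
        for name, sql_type in columns
    )
    return (
        f"SELECT TOP {int(top)} *\n"
        "FROM OPENROWSET(\n"
        f"    BULK '{safe_url}',\n"
        "    FORMAT = 'CSV',\n"
        "    PARSER_VERSION = '2.0'\n"
        ") WITH (\n"
        f"    {column_list}\n"
        ") AS [result];"
    )


# Hypothetical storage account, container, and folder path.
query = build_openrowset_query(
    "https://contosolake.dfs.core.windows.net/dynamics/Tables/CustTable/*.csv",
    [("ACCOUNTNUM", "varchar(20)"), ("CREDITMAX", "decimal(32, 6)"), ("RECID", "bigint")],
    top=5,
)
print(query)
```

You could paste the printed statement into a serverless SQL pool query window, or send it through any SQL client library you already use. Declaring the columns by hand is only practical for a few tables; generating the definitions from the metadata is what makes a tool-based approach attractive.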

You can make the data lake into your big data warehouse by bringing in data from many different sources. You can use SQL or Spark to combine the data. You can also create pipelines with complex transforms. Then, you can create Power BI reports right within Azure Synapse. Simply choose the database and create a Power BI dataset in one step. Your users can open this dataset in Power BI and create rich reports.
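To show what combining sources means in practice, here is a small sketch of the factory-floor scenario mentioned earlier: each sensor signal is tagged with the production order that was running on that machine at that time. At data lake scale you would express this join in SQL or Spark; plain Python is used here only to make the logic easy to follow. All order numbers, machine IDs, field names, and readings are made up for illustration.

```python
from datetime import datetime

# Hypothetical production schedule rows, as they might look after being
# read from exported business data.
schedule = [
    {"order": "P-1001", "machine": "M1",
     "start": datetime(2021, 6, 1, 8, 0), "end": datetime(2021, 6, 1, 12, 0)},
    {"order": "P-1002", "machine": "M1",
     "start": datetime(2021, 6, 1, 12, 0), "end": datetime(2021, 6, 1, 16, 0)},
]

# Hypothetical sensor signals ingested from the factory floor.
signals = [
    {"machine": "M1", "time": datetime(2021, 6, 1, 9, 30), "temperature": 71.5},
    {"machine": "M1", "time": datetime(2021, 6, 1, 13, 15), "temperature": 88.0},
    {"machine": "M2", "time": datetime(2021, 6, 1, 9, 45), "temperature": 65.0},
]


def attach_orders(signals, schedule):
    """Tag each signal with the order running on its machine at that time.

    Signals that match no scheduled order get None, like a left outer join.
    """
    merged = []
    for signal in signals:
        order = next(
            (slot["order"] for slot in schedule
             if slot["machine"] == signal["machine"]
             and slot["start"] <= signal["time"] < slot["end"]),
            None,
        )
        merged.append({**signal, "order": order})
    return merged


merged = attach_orders(signals, schedule)
for row in merged:
    print(row["time"], row["machine"], row["temperature"], row["order"])
```

The half-open interval (`start <= time < end`) ensures that a signal recorded exactly at a shift boundary is assigned to only one order.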

Next steps

Read the Export to Azure Data Lake overview to learn more about the Export to Data Lake feature.

For step-by-step instructions on how to install the Export to Data Lake add-in, see Install Export to Data Lake add-in.

We are excited to release this feature to general availability. You can also join the preview Yammer group to stay in touch with the product team as we continue to improve this feature.